Apache Spark — Column Object

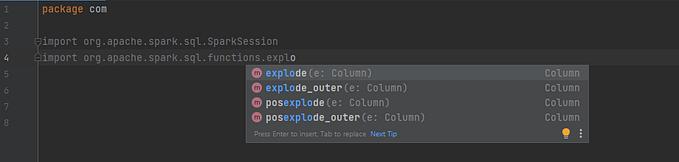

There are many ways to create a column object in Apache Spark.

Let’s explore.

A column can be created using the col or column function from org.apache.spark.sql.functions.
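A minimal sketch of both functions, using the first_name column name that appears later in this post. The value names viaCol and viaColumn are only illustrative.

```scala
import org.apache.spark.sql.Column
import org.apache.spark.sql.functions.{col, column}

// Both functions build a Column from a column name.
// Neither value is tied to a DataFrame yet.
val viaCol: Column = col("first_name")
val viaColumn: Column = column("first_name")

println(viaCol)
println(viaColumn)
```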

Another way is to use a string prefixed with a dollar sign ($), such as $"first_name", or a Scala Symbol, which is written with a leading single quote ('first_name), not a backtick. Both forms only work if you have imported spark.implicits._. Check this blog for Spark implicits (link).

In the Scala spark-shell, spark.implicits._ is already imported, so you can create columns directly using $ strings.

Spark implicits has these members:

implicit class StringToColumn extends AnyRef

Converts $"col name" into a Column.

implicit def symbolToColumn(s: Symbol): ColumnName

An implicit conversion that turns a Scala Symbol into a Column.

See the example below:

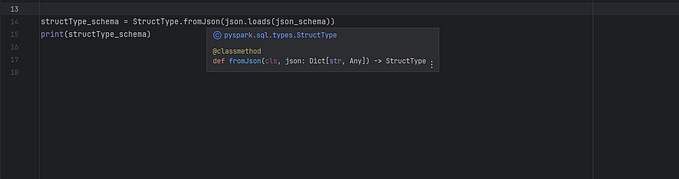
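In text form, the two implicit conversions can be sketched roughly as follows. The local SparkSession and the app name are assumptions made so the snippet can run outside spark-shell; inside spark-shell the spark value and the import already exist.

```scala
import org.apache.spark.sql.{Column, SparkSession}

// Assumed local session; getOrCreate() reuses an existing one if present.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("column-implicits") // hypothetical app name
  .getOrCreate()
import spark.implicits._

// StringToColumn: the $ string interpolator produces a Column.
val fromDollar: Column = $"first_name"

// symbolToColumn: a Scala Symbol is converted to a Column.
// Symbol("first_name") is the same value as the literal 'first_name;
// the literal syntax is deprecated since Scala 2.13.
val fromSymbol: Column = Symbol("first_name")

println(fromDollar)
println(fromSymbol)
```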

We can also create a TypedColumn, which is a column that carries an Encoder for its value type. A TypedColumn is created by calling the as operator on a Column.
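A minimal sketch of the as operator in use. The people DataFrame, its contents, and the session setup are hypothetical and exist only for illustration; the Encoder[String] is assumed to come from spark.implicits._.

```scala
import org.apache.spark.sql.{Dataset, SparkSession, TypedColumn}
import org.apache.spark.sql.functions.col

// Assumed local session; in spark-shell `spark` already exists.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("typed-column") // hypothetical app name
  .getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val people = Seq(("Ada", 36), ("Alan", 41)).toDF("first_name", "age")

// as[String] needs an implicit Encoder[String], supplied by spark.implicits._.
val typedName: TypedColumn[Any, String] = col("first_name").as[String]

// Selecting a single TypedColumn returns a Dataset[String] rather than a DataFrame.
val names: Dataset[String] = people.select(typedName)
names.show()
```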

def as[U](implicit arg0: Encoder[U]): TypedColumn[Any, U]

Provides a type hint about the expected return value of this column. This information can be used by operations such as select on a Dataset to automatically convert the results into the correct JVM types.

So far, the columns we have seen are generic columns that are not tied to any DataFrame.

| columns |
| --- |
| $"first_name" |
| 'first_name |
| col("first_name") |
| column("first_name") |

A new column can be constructed based on the input columns present in a DataFrame:
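A minimal sketch of this, assuming a small hypothetical DataFrame df with first_name and last_name columns. It contrasts a column bound to a specific DataFrame with a generic one.

```scala
import org.apache.spark.sql.{Column, SparkSession}
import org.apache.spark.sql.functions.col

// Assumed local session; in spark-shell `spark` already exists.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("dataframe-columns") // hypothetical app name
  .getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(("Ada", "Lovelace")).toDF("first_name", "last_name")

val bound: Column = df("first_name")   // resolved against this specific DataFrame
val generic: Column = col("last_name") // not yet associated with any DataFrame

val selected = df.select(bound, generic)
selected.show()
```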

Extracting a struct field:
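A minimal sketch of two ways to reach a struct field: dot notation in the column name and getField. The nested DataFrame and its name struct with fields first and last are hypothetical and built only for illustration.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, struct}

// Assumed local session; in spark-shell `spark` already exists.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("struct-field") // hypothetical app name
  .getOrCreate()
import spark.implicits._

// Hypothetical data: pack two flat columns into a struct column called "name".
val nested = Seq(("Ada", "Lovelace")).toDF("first", "last")
  .select(struct(col("first"), col("last")).alias("name"))

// Dot notation inside the column name extracts a field of the struct.
val firstNames = nested.select(col("name.first"))

// getField does the same on an existing Column.
val lastNames = nested.select(col("name").getField("last"))

firstNames.show()
lastNames.show()
```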

Column objects can be composed to form complex expressions:
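A minimal sketch of composition, assuming a hypothetical DataFrame with integer columns a and b. Operators such as + and === are methods on Column and return new Column objects.

```scala
import org.apache.spark.sql.{Column, SparkSession}

// Assumed local session; in spark-shell `spark` already exists.
val spark = SparkSession.builder()
  .master("local[*]")
  .appName("column-expressions") // hypothetical app name
  .getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq((1, 1), (2, 5)).toDF("a", "b")

val plusOne: Column = $"a" + 1  // arithmetic expression
val same: Column = $"a" === $"b" // equality test; note the triple equals

val result = df.select(plusOne.alias("a_plus_one"), same.alias("a_equals_b"))
result.show()
```

Nothing is evaluated when the expressions are built. They are only computed when an action such as show or collect runs on a DataFrame that uses them.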